The drilling industry has changed considerably in the more than 150 years since the “Drake Well” was drilled, but the principle remains the same: crush rock to make a big hole in the ground. Wells have become far deeper and more complex, requiring significant technological advances to keep producing a commodity that is still the cheapest source of energy by far. Oil and gas drilling also generally takes place in remote locations, such as offshore or in the desert, which makes connectivity, and therefore the movement of data and information, a challenge.

Other challenges unique to drilling have slowed the industry’s progress in taking full advantage of its data, or Big Data. Compared with industries that have exploited their data successfully, such as the automobile industry, the clear differentiators are data volume and ease of access. At the 2014 peak of drilling activity, there were around 1,900 rigs operating in the U.S., a huge number for the oilfield; by comparison, Ford sells around 2,500 F-150s per day. A data scientist building training data for failure-prediction machine-learning models would therefore probably prefer to work with F-150s. Not only is the sample size far larger, but the trucks also generally operate under similar conditions, with large deviations occurring only as outliers. All drilling rigs are effectively doing the same thing, but crushing rock and bringing it to the surface in Oklahoma is very different from doing so in North Dakota.

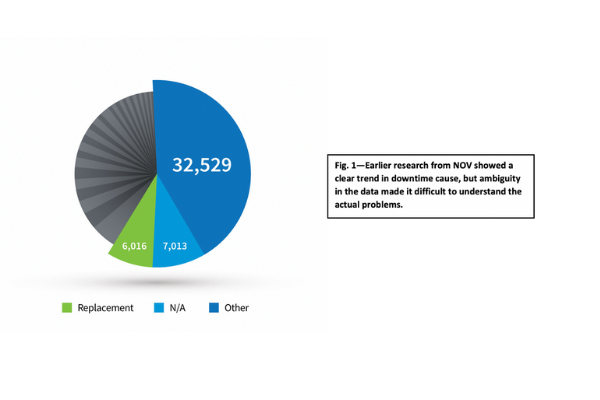

Sensors are necessary for data acquisition, which is central to the concept of Big Data. The challenge is that sensors must survive the harsh conditions encountered downhole, such as high pressures and temperatures. The shock and loads that drilling-rig equipment experiences are also extreme, so companies that build IoT sensors for appliances in climate-controlled environments may be unable to produce sensors durable enough for the drilling industry.

The other component of data acquisition is human: entering data requires discipline and attention. In a 2009 NOV study of data from 100 rigs operated by five drilling contractors, the largest recorded cause of downtime was “Other,” and the second largest was “N/A” (see Fig. 1). Had the people involved attributed each downtime event to a specific root cause, the data could have supported substantial reductions in downtime. Fortunately, companies and software vendors are making it harder to enter bad data, which has substantially reduced the problem on that front. The current generation of control systems can also record much of that data automatically, without human input; even so, data quality should remain a constant focus.
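To illustrate how entry software can stop uninformative root causes like “Other” and “N/A” at the source, the following minimal sketch rejects catch-all cause codes when a downtime record is entered. The cause-code list, the `DowntimeEntry` record, and `validate_downtime_entry` are all hypothetical and not drawn from NOV or any particular rig software; the sketch assumes a fixed list of approved codes.

```python
from dataclasses import dataclass

# Hypothetical approved cause codes; a real rig would use its contractor's own taxonomy.
ALLOWED_CAUSE_CODES = frozenset({
    "top_drive", "mud_pump", "drawworks", "bop", "waiting_on_weather",
})

# Catch-all values that record downtime without identifying a root cause.
CATCH_ALL_CODES = frozenset({"other", "n/a", "na", "unknown", ""})


@dataclass
class DowntimeEntry:
    """Hypothetical downtime record as entered by rig personnel."""
    rig_id: str
    hours: float
    cause_code: str


def validate_downtime_entry(entry, allowed_codes=ALLOWED_CAUSE_CODES):
    """Return a list of problems; an empty list means the entry is accepted."""
    problems = []
    # Normalize so " N/A " and "n/a" are treated the same.
    code = entry.cause_code.strip().lower()
    if entry.hours <= 0:
        problems.append("downtime hours must be positive")
    if code in CATCH_ALL_CODES:
        problems.append(f"catch-all cause {entry.cause_code!r}; pick a specific root cause")
    elif code not in allowed_codes:
        problems.append(f"unrecognized cause code {entry.cause_code!r}")
    return problems


if __name__ == "__main__":
    for e in [
        DowntimeEntry("RIG-042", 6.5, "mud_pump"),
        DowntimeEntry("RIG-042", 3.0, "Other"),
        DowntimeEntry("RIG-017", 1.5, "N/A"),
    ]:
        print(e.rig_id, e.cause_code, validate_downtime_entry(e) or "accepted")
```

Rejecting the entry outright is the strictest policy; a softer variant might accept “Other” only when accompanied by a free-text description, trading some data consistency for less friction on the rig floor.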